Key Takeaways

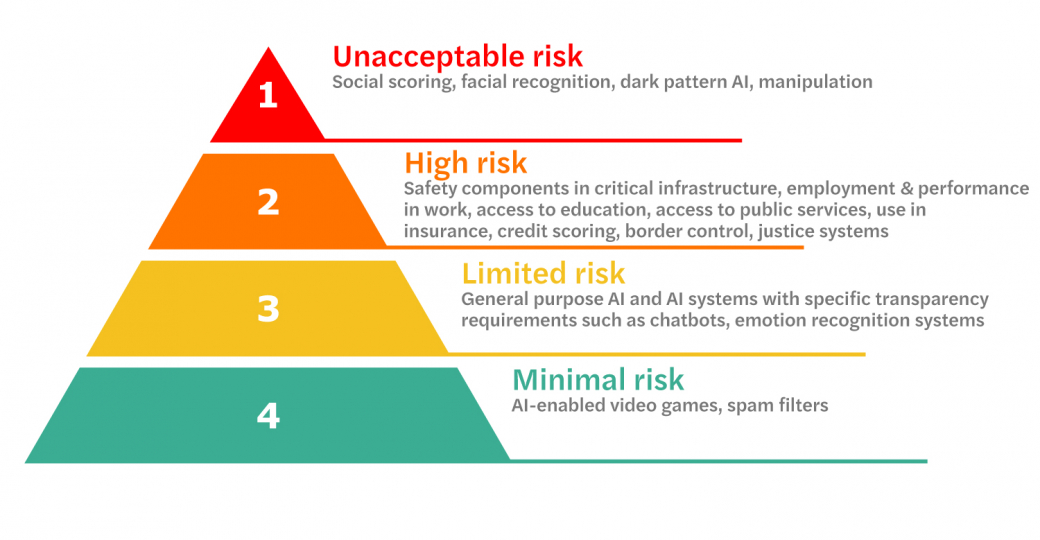

- AI regulation is evolving globally, with the EU AI Act leading the way as a comprehensive framework that classifies AI systems by risk level, from unacceptable to minimal risk.

- Key ethical principles driving regulation include transparency, fairness, human oversight, privacy protection, and accountability—all aimed at ensuring AI benefits society without causing harm.

- Organizations developing or deploying AI systems must implement practical compliance measures including documentation, impact assessments, and continuous monitoring.

- The regulatory landscape varies significantly between regions, with the EU adopting a strict risk-based approach, the US pursuing sector-specific regulation, and China focusing on national security and social stability.

- Industry self-regulation through standards bodies like IEEE and ISO plays a crucial role in establishing best practices for responsible AI development.

“AI Regulation: A Step Forward or Ethics …” from www.spiceworks.com and used with no modifications.

As artificial intelligence transforms industries and societies worldwide, the regulatory landscape is rapidly evolving to address both unprecedented opportunities and risks. Navigating this complex terrain has become essential for organizations developing or deploying AI systems. With regulatory frameworks taking shape across jurisdictions, understanding the intersection of AI regulation and ethics is no longer optional—it’s a business imperative.

Why AI Regulation Matters Right Now

AI regulation has moved from theoretical discussions to concrete policy implementation. This shift comes as AI systems increasingly make consequential decisions affecting human lives, from determining creditworthiness to diagnosing medical conditions and powering autonomous vehicles. Without appropriate guardrails, these powerful technologies risk causing significant harm while undermining public trust in AI innovation.

As Market Business Watch has observed in analyzing market responses to regulatory announcements, forward-thinking companies aren’t waiting for regulations to be finalized before implementing ethical AI practices. Those proactively addressing ethical concerns are positioning themselves advantageously in markets increasingly sensitive to responsible technology use.

Real Risks of Unregulated AI Systems

Unregulated AI systems present tangible risks that extend beyond hypothetical scenarios. Biased algorithms have demonstrably led to discriminatory lending practices, unfair hiring decisions, and inequitable criminal justice outcomes. Facial recognition technologies deployed without proper safeguards have raised serious privacy concerns and civil liberties issues. Meanwhile, generative AI systems can produce convincing misinformation at unprecedented scale.

Data protection vulnerabilities in AI systems have resulted in significant breaches affecting millions. In healthcare, AI diagnostic tools operating without appropriate validation have produced dangerous false negatives and positives. These real-world harms underscore why thoughtful regulation has become urgent rather than speculative.

The opacity of many AI systems compounds these risks. When organizations deploy black-box algorithms without understanding their decision-making processes, accountability becomes nearly impossible. Regulations aim to close these accountability gaps through transparency and documentation requirements.

The Balance Between Innovation and Safety

Effective AI regulation must strike a delicate balance: providing sufficient protection against harms while enabling continued innovation. This tension defines much of the current regulatory discourse. Overly restrictive regulations risk stifling technological progress and competitive advantage, while insufficient oversight exposes society to preventable harms. For more insights on AI ethics, see UNESCO’s Recommendation on the Ethics of Artificial Intelligence.

Regulatory Balance Framework

Regulatory approaches must balance multiple competing priorities:

- Protection of fundamental rights vs. technological advancement

- Certainty for businesses vs. flexibility for innovation

- Prescriptive rules vs. outcome-based requirements

- National/regional interests vs. international harmonization

Most emerging regulatory frameworks attempt to navigate this balance through risk-based approaches. By calibrating regulatory requirements to an AI system’s potential for harm, these frameworks aim to apply proportionate oversight. High-risk applications face more stringent requirements, while lower-risk systems encounter fewer regulatory hurdles.

Who’s Responsible When AI Goes Wrong?

The question of responsibility and liability represents one of the most challenging aspects of AI regulation. When an autonomous vehicle causes an accident, is the manufacturer, software developer, data provider, or vehicle owner responsible? Traditional liability frameworks struggle to address AI systems where decision-making is distributed across multiple actors and emerges from complex, sometimes unpredictable interactions.

Regulatory approaches are evolving to address this accountability gap. The EU AI Act, for instance, places significant responsibilities on AI providers while recognizing the role of deployers. In high-risk contexts, joint liability models are gaining traction, with various stakeholders bearing responsibility proportionate to their control over the system.

This shift toward distributed responsibility reflects the reality of modern AI development and deployment. No single entity typically controls the entire AI lifecycle, from data collection through model development to deployment and monitoring. Effective regulation must therefore create accountability frameworks that map to this distributed reality while ensuring that affected individuals have clear recourse when harms occur. For a comprehensive understanding of these frameworks, you can refer to the Recommendation on the Ethics of AI.

- Providers must ensure their AI systems comply with applicable requirements before deployment

- Deployers bear responsibility for using systems as intended and implementing required oversight

- End users may share responsibility when misusing systems against clear guidelines

- Regulatory authorities hold enforcement powers to address non-compliance

- Insurance markets are developing new products to address AI liability risks

Global AI Regulation Landscape

“Global AI Regulation in 2024 …” from now.digital and used with no modifications.

The global regulatory landscape for AI reflects diverse cultural, legal, and economic priorities. While some jurisdictions favor comprehensive frameworks, others pursue sector-specific or principle-based approaches. This regulatory diversity creates compliance challenges for organizations operating globally, as they must navigate varying and sometimes conflicting requirements.

The EU AI Act: What You Need to Know

The European Union’s AI Act represents the world’s first comprehensive regulatory framework specifically designed for artificial intelligence. Finalized in 2024, this landmark legislation takes a risk-based approach to AI governance, categorizing systems based on their potential impact on safety, rights, and well-being. The Act applies not only to EU-based developers but to any organization deploying AI systems within EU territory, giving it significant global influence.

At its core, the EU AI Act prohibits certain AI applications deemed to present unacceptable risk, including social scoring systems, emotion recognition in educational or workplace settings, and the untargeted scraping of facial images to build facial recognition databases. For high-risk applications in sectors like healthcare, transportation, and justice, the Act mandates rigorous requirements including risk assessments, human oversight mechanisms, and detailed technical documentation.

Compliance timelines vary by risk category: the bans on unacceptable-risk practices apply first, from February 2025, while most high-risk obligations phase in during 2026 and 2027. Organizations developing or deploying AI systems for the European market should begin preparation immediately, as penalties for the most serious violations can reach €35 million or 7% of global annual turnover, whichever is higher, exceeding even the GDPR's maximum of €20 million or 4%.
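The "whichever is higher" rule makes the penalty ceiling simple arithmetic. The sketch below is a minimal illustration, assuming the top-tier figures in the two laws (under the EU AI Act, €35 million or 7% of worldwide annual turnover for prohibited practices; under the GDPR, €20 million or 4%) and a large company. The function name is hypothetical, and the AI Act applies the lower of the two amounts to SMEs instead.

```python
# Hypothetical helper: ceiling on a fine for the most serious violation tier.
# Assumes a large undertaking, where the HIGHER of the fixed cap and the
# turnover-based cap applies (the AI Act uses the lower of the two for SMEs).

def max_fine_eur(annual_turnover_eur: int, fixed_cap_eur: int, turnover_percent: int) -> int:
    """Return max(fixed cap, percent of worldwide annual turnover), in whole euros."""
    turnover_based = annual_turnover_eur * turnover_percent // 100  # integer math avoids float rounding
    return max(fixed_cap_eur, turnover_based)

# (fixed cap in EUR, percent of turnover) for the top tier of each regime
AI_ACT_PROHIBITED_PRACTICES = (35_000_000, 7)
GDPR_TOP_TIER = (20_000_000, 4)

if __name__ == "__main__":
    turnover = 2_000_000_000  # illustrative: EUR 2 billion worldwide annual turnover
    print(f"AI Act ceiling: EUR {max_fine_eur(turnover, *AI_ACT_PROHIBITED_PRACTICES):,}")
    print(f"GDPR ceiling:   EUR {max_fine_eur(turnover, *GDPR_TOP_TIER):,}")
```

For €2 billion in turnover this prints ceilings of €140 million (AI Act) and €80 million (GDPR). Below €500 million in turnover, both regimes' fixed caps exceed the percentage-based amounts.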

US Approach to AI Oversight

The United States has pursued a more decentralized approach to AI regulation, emphasizing sector-specific rules and voluntary frameworks. Rather than enacting comprehensive AI legislation, U.S. regulators have incorporated AI oversight into existing regulatory structures across fields like healthcare, financial services, and transportation. This approach provides flexibility but creates a patchwork of requirements that organizations must navigate.

At the federal level, the Biden Administration’s Executive Order 14110 on Safe, Secure, and Trustworthy AI (October 2023) established guardrails for federal agencies developing and deploying AI systems and directed sector-specific regulators to address AI risks within their domains; it was revoked in January 2025. Meanwhile, states like California, Colorado, and Illinois have enacted their own AI-related legislation, particularly addressing privacy concerns, algorithmic transparency, and biometric data protection.

Industry standards and voluntary frameworks play a particularly important role in the U.S. context. NIST’s AI Risk Management Framework, for example, is not legally binding but is increasingly referenced in procurement requirements and may influence future regulatory development. Companies operating in the U.S. market should monitor both federal guidance and state-level developments while participating in relevant standards-setting processes.

China’s AI Governance Framework

China has developed a distinctive approach to AI governance that balances promoting technological leadership with ensuring alignment with national security and social stability objectives. The country’s regulatory framework combines binding regulations with government-led standards and ethical guidelines. Most notably, China has implemented some of the world’s first regulations specifically addressing generative AI and recommendation algorithms.

The 2022 Provisions on Deep Synthesis Internet Information Services established requirements for AI-generated content, including clear labeling of synthetic media and mechanisms to prevent deepfakes. Meanwhile, the 2021 Algorithm Recommendation Management Provisions imposed transparency and user control requirements on recommendation systems. These targeted regulations demonstrate China’s willingness to address specific AI applications presenting immediate societal concerns.

China’s approach emphasizes the role of the state in guiding AI development toward national priorities while managing associated risks. Organizations operating in the Chinese market must navigate both explicit regulations and implicit expectations regarding data security, content controls, and alignment with industrial policies, a complex environment requiring local expertise and careful compliance planning.

How Different Countries Are Tackling AI

Global AI Regulatory Approaches

| Region | Regulatory Approach | Key Features | Implementation Status |
|---|---|---|---|
| European Union | Comprehensive regulation | Risk-based classification, prohibited applications, strict requirements for high-risk AI | Implemented, phased compliance |
| United States | Sector-specific regulation | Existing regulatory frameworks, voluntary standards, state-level initiatives | Evolving, fragmented |
| China | Targeted regulation | Focus on specific applications (generative AI, recommendation systems), national security | Active implementation |
| Canada | Rights-based approach | Artificial Intelligence and Data Act, emphasis on transparency and risk mitigation | Proposed, not enacted |
| Singapore | Voluntary frameworks | AI Governance Framework, sector-specific guidelines, certification program | Established, voluntary |

Beyond major powers, countries worldwide are developing distinct approaches to AI governance. Canada’s proposed Artificial Intelligence and Data Act takes a rights-based approach focused on transparency and harm prevention. Singapore has pioneered voluntary frameworks accompanied by certifications that provide market incentives for responsible AI. Japan emphasizes human-centric AI development through non-binding principles and international cooperation initiatives.

Core Ethical Principles Guiding AI Regulation

“Ethics of Artificial Intelligence | UNESCO” from www.unesco.org and used with no modifications.

Despite the diversity in regulatory approaches, core ethical principles have emerged as common foundations for AI governance frameworks worldwide. These principles provide the normative basis for specific legal requirements and establish a shared language for discussing AI ethics across cultural and jurisdictional boundaries. Understanding these principles helps organizations anticipate regulatory trends and build AI systems aligned with societal values.

While terminology varies between frameworks, most incorporate variations of transparency, fairness, accountability, human autonomy, and privacy. These principles manifest differently across regulations but serve as conceptual bridges between ethical discourse and legal requirements. Organizations developing responsible AI programs should consider how their governance structures and technical approaches address each of these core principles.

Transparency Requirements for AI Systems

Transparency has emerged as a foundational principle in AI regulation, addressing the “black box” problem that has undermined trust and accountability. Regulatory frameworks increasingly require that AI systems be explainable to varying degrees depending on their context and risk level. This principle typically manifests in requirements for technical documentation, model explainability, and user notification.

The EU AI Act exemplifies this approach by requiring high-risk AI systems to include sufficient technical documentation to demonstrate compliance and enable meaningful human oversight. Systems must be designed to enable users to interpret outputs and understand the decision-making process to an appropriate degree. Meanwhile, GDPR’s provisions on automated decision-making establish rights to “meaningful information about the logic involved” when such systems produce legal or similarly significant effects.

Practical implementation of transparency requirements varies by context. Medical AI systems may require detailed explainability to enable clinician oversight, while consumer-facing applications might satisfy requirements through clear disclosure of AI use and limitations. Organizations should assess transparency needs across their AI portfolio, considering both regulatory requirements and user expectations in each deployment context.

Fairness and Non-Discrimination Standards

Fairness and non-discrimination principles address AI’s potential to perpetuate or amplify historical biases. Regulations increasingly require organizations to identify, measure, and mitigate bias in AI systems, particularly in high-stakes domains like employment, lending, housing, and criminal justice. These requirements typically go beyond prohibiting intentional discrimination to address disparate impact—where seemingly neutral systems produce significantly different outcomes across protected groups.

- Pre-deployment bias testing using diverse datasets and multiple fairness metrics

- Documentation of design choices made to prevent discriminatory outcomes

- Ongoing monitoring of system performance across demographic groups

- Regular audits to identify potential emergent biases in deployed systems

- Mitigation strategies when disparities are detected, including model updates
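The bias-testing and monitoring steps above usually start with a comparison of outcomes across groups. The following is a minimal sketch, assuming binary decisions and a single group attribute. The 0.8 threshold borrows the "four-fifths rule" from US employment-selection guidance and is an assumption here, not a requirement of any AI-specific law; all names and data are hypothetical.

```python
from collections import defaultdict

def selection_rates(groups, decisions):
    """Share of favourable decisions (1 = selected) within each group."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        selected[group] += decision
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody selected in any group, so selection rates are equal
    return min(rates.values()) / highest

def check_disparity(groups, decisions, threshold=0.8):
    """Flag the system if the ratio falls below the (assumed) four-fifths threshold."""
    rates = selection_rates(groups, decisions)
    ratio = disparate_impact_ratio(rates)
    return rates, ratio, ratio < threshold

if __name__ == "__main__":
    groups = ["A"] * 10 + ["B"] * 10
    decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7  # A: 6 of 10 selected, B: 3 of 10
    rates, ratio, flagged = check_disparity(groups, decisions)
    print(rates, ratio, flagged)  # {'A': 0.6, 'B': 0.3} 0.5 True
```

A ratio of 0.5 means group B is selected at half the rate of group A, well below the assumed threshold. Running the same check on production decisions at regular intervals turns it into ongoing monitoring rather than a one-off test.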

Implementing fairness requirements presents technical challenges, as fairness itself has multiple, sometimes conflicting definitions. Organizations must make contextual judgments about which fairness metrics are most appropriate for specific applications. These decisions should be documented as part of the AI system’s design process, explaining the rationale for chosen approaches and acknowledging inherent limitations and tradeoffs.
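The conflict between fairness definitions shows up even on toy data. This sketch compares two common definitions, demographic parity (equal positive-prediction rates across groups) and equal opportunity (equal true-positive rates across groups), on hypothetical labels whose base rates differ between groups. Function names and data are illustrative.

```python
def mean(values):
    return sum(values) / len(values) if values else 0.0

def positive_rate(y_pred, groups, group):
    """Demographic parity compares this: share of the group predicted positive."""
    return mean([p for p, g in zip(y_pred, groups) if g == group])

def true_positive_rate(y_true, y_pred, groups, group):
    """Equal opportunity compares this: share of actual positives predicted positive."""
    return mean([p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1])

if __name__ == "__main__":
    groups = ["A"] * 10 + ["B"] * 10
    # Hypothetical labels with different base rates: 5 of 10 positives in A, 2 of 10 in B.
    y_true = [1] * 5 + [0] * 5 + [1] * 2 + [0] * 8
    y_pred = list(y_true)  # a classifier that happens to be perfectly accurate
    dp_gap = abs(positive_rate(y_pred, groups, "A") - positive_rate(y_pred, groups, "B"))
    eo_gap = abs(true_positive_rate(y_true, y_pred, groups, "A")
                 - true_positive_rate(y_true, y_pred, groups, "B"))
    print(f"demographic parity gap: {dp_gap:.2f}")  # 0.30
    print(f"equal opportunity gap:  {eo_gap:.2f}")  # 0.00
```

Because predictions match the labels exactly, the equal-opportunity gap is zero, yet the demographic-parity gap is 0.30 because 50% of group A and 20% of group B are actual positives. Closing that gap would mean changing some predictions away from the true labels, which is the kind of tradeoff the design documentation should record.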

Beyond technical approaches, addressing fairness requires diverse teams, stakeholder engagement, and domain expertise. Organizations should involve affected communities in the design and evaluation process, particularly for systems affecting vulnerable populations. This participatory approach helps identify potential harms that might be overlooked in purely technical assessments and builds trust with affected stakeholders.

Human Oversight and Control Mechanisms

Human oversight represents a central pillar of ethical AI regulation, ensuring that automated systems remain under meaningful human control. Regulatory frameworks increasingly mandate human oversight proportionate to a system’s risk level and application context. This principle manifests in requirements ranging from “human-in-the-loop” designs where humans must approve key decisions to “human-on-the-loop” models enabling intervention in operational systems.
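The difference between the two oversight models is where the default sits. Here is a minimal sketch with hypothetical names, in which plain callables stand in for the human reviewer's choice. A real system would also log who decided what and why.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Recommendation:
    case_id: str
    outcome: str       # what the AI system proposes, e.g. "approve" or "deny"
    confidence: float  # model score, shown to the human as context

def human_in_the_loop(rec: Recommendation,
                      reviewer_approves: Callable[[Recommendation], bool]) -> Optional[str]:
    """Nothing takes effect until a human approves; None means the case stays with the reviewer."""
    return rec.outcome if reviewer_approves(rec) else None

def human_on_the_loop(rec: Recommendation,
                      monitor_override: Callable[[Recommendation], Optional[str]]) -> str:
    """The system's outcome takes effect by default; a monitoring human may substitute another."""
    override = monitor_override(rec)
    return override if override is not None else rec.outcome

if __name__ == "__main__":
    rec = Recommendation("case-001", "deny", 0.62)
    # Lambdas stand in for a person's judgment in this demo.
    print(human_in_the_loop(rec, lambda r: False))                  # None (not approved)
    print(human_on_the_loop(rec, lambda r: None))                   # deny (no intervention)
    print(human_on_the_loop(rec, lambda r: "refer to specialist"))  # refer to specialist
```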

The EU AI Act codifies this principle by requiring high-risk AI systems to include appropriate human oversight measures. These measures must enable humans to properly understand system capabilities and limitations, remain aware of automation bias, correctly interpret outputs, and override decisions when necessary. This approach acknowledges that effective oversight requires both technical design features and organizational processes ensuring that humans have both the authority and capability to exercise meaningful control.

Organizations implementing human oversight should consider how to balance automation benefits with appropriate control mechanisms. This requires careful interface design making system limitations transparent, ongoing training for oversight personnel, and organizational structures that value and support human judgment. Well-designed oversight mechanisms prevent harmful outcomes while preserving efficiency gains from automation.

Privacy Protection in the Age of AI

AI systems frequently process vast quantities of personal data, creating unique privacy challenges that regulators are increasingly addressing. While general data protection laws like GDPR already apply to AI processing, emerging AI-specific regulations add requirements tailored to machine learning’s distinctive characteristics. These include provisions addressing inferences, synthetic data, and model transparency.

Privacy-by-design requirements mandate that AI systems incorporate privacy protections from the earliest design stages rather than as afterthoughts. For high-risk systems, this often includes data minimization strategies, purpose limitations, and robust security measures. Additionally, regulations increasingly require privacy impact assessments specifically addressing AI-related risks, such as the potential for re-identification or unauthorized inference of sensitive attributes.
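Data minimization and purpose limitation can be enforced mechanically before data ever reaches a model. This is a minimal sketch, assuming a hypothetical allowlist that maps each declared processing purpose to the fields it needs.

```python
# Hypothetical purpose-to-fields allowlist maintained alongside the privacy impact assessment.
ALLOWED_FIELDS = {
    "credit_scoring": {"income", "existing_debt", "payment_history"},
    "fraud_detection": {"transaction_amount", "merchant_id", "timestamp"},
}

def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields declared necessary for `purpose`; refuse undeclared purposes."""
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"no declared processing purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}

if __name__ == "__main__":
    applicant = {"name": "J. Doe", "marital_status": "married", "income": 52_000,
                 "existing_debt": 8_000, "payment_history": "no defaults"}
    print(minimize(applicant, "credit_scoring"))
    # {'income': 52000, 'existing_debt': 8000, 'payment_history': 'no defaults'}
```

Refusing undeclared purposes, rather than passing data through unchanged, makes purpose limitation fail closed.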

Technical approaches like federated learning, differential privacy, and synthetic data generation are gaining regulatory recognition as privacy-enhancing technologies. Organizations should evaluate these approaches as potential compliance tools while recognizing that technical measures alone are insufficient without appropriate governance frameworks. Comprehensive privacy protection requires integrating technical, organizational, and contractual safeguards throughout the AI lifecycle.
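Differential privacy is the most formally defined of these techniques. The sketch below shows its basic building block, the Laplace mechanism applied to a counting query, using only Python's standard library. Function names and data are hypothetical. Production systems should rely on a vetted DP library, since naive floating-point sampling like this has known weaknesses.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Epsilon-DP count. Adding or removing one person changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon suffices."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

if __name__ == "__main__":
    rng = random.Random(7)  # seeded only to make the demo repeatable
    ages = [23, 67, 45, 71, 38, 80, 52, 66]
    noisy = private_count(ages, lambda age: age >= 65, epsilon=0.5, rng=rng)
    print(f"noisy count of people aged 65+: {noisy:.1f} (true count is 4)")
```

Smaller values of epsilon add more noise and give stronger privacy. Each additional query on the same data consumes more of the overall privacy budget.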

Accountability Frameworks for AI Developers

Accountability principles place responsibility on organizations to demonstrate compliance with regulatory requirements and address harms that may arise from AI systems. This principle has manifested in requirements for comprehensive documentation, risk management systems, and governance structures. The goal is to ensure that organizations can both prevent harms and respond effectively when incidents occur.

Modern accountability frameworks typically require organizations to maintain documentation covering the entire AI lifecycle. This includes records of data sources and processing, model development decisions, validation testing, deployment procedures, and monitoring activities. Such documentation both supports compliance and enables meaningful incident investigation when problems arise.
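A lifecycle record can be as simple as a structured log that makes documentation gaps visible. Here is a minimal sketch. The stage names, fields, and class names are hypothetical, chosen to mirror the lifecycle elements in the paragraph above.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date
from typing import List, Optional

# Hypothetical stage names mirroring the lifecycle elements in the text.
LIFECYCLE_STAGES = ("data", "development", "validation", "deployment", "monitoring")

@dataclass
class LifecycleEntry:
    stage: str
    description: str
    owner: str        # accountable person or team
    recorded_on: str  # ISO 8601 date

@dataclass
class AISystemRecord:
    system_name: str
    intended_purpose: str
    risk_tier: str
    entries: List[LifecycleEntry] = field(default_factory=list)

    def log(self, stage: str, description: str, owner: str, when: Optional[date] = None) -> None:
        """Append an entry; reject stage names outside the agreed lifecycle."""
        if stage not in LIFECYCLE_STAGES:
            raise ValueError(f"unknown lifecycle stage: {stage!r}")
        stamp = (when or date.today()).isoformat()
        self.entries.append(LifecycleEntry(stage, description, owner, stamp))

    def missing_stages(self) -> List[str]:
        """Stages with no documentation yet: gaps to close before an audit."""
        covered = {entry.stage for entry in self.entries}
        return [stage for stage in LIFECYCLE_STAGES if stage not in covered]

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)

if __name__ == "__main__":
    record = AISystemRecord("resume-screener", "rank job applications", "high")
    record.log("data", "Sourced historical applications; removed names and photos", "data-team", date(2024, 3, 1))
    record.log("validation", "Selection-rate ratio across groups reviewed", "ml-qa", date(2024, 4, 15))
    print(record.missing_stages())  # ['development', 'deployment', 'monitoring']
    print(record.to_json())
```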

Beyond documentation, accountability frameworks typically require defined responsibility structures within organizations. This includes designating individuals with authority for AI oversight, establishing clear escalation paths for identified risks, and implementing processes for addressing stakeholder concerns. Organizations operating in regulated environments should establish these governance structures proactively, regardless of whether specific regulations currently mandate them in their jurisdiction.

Risk-Based Classification of AI Systems

“EU AI Act: different risk levels of AI …” from www.forvismazars.com and used with no modifications.

The risk-based approach to AI regulation has emerged as the dominant paradigm in regulatory frameworks worldwide. This approach recognizes that not all AI applications present equal risks and calibrates regulatory requirements accordingly. Understanding risk classification frameworks is essential for organizations to anticipate compliance obligations and allocate resources appropriately.

While classification specifics vary between jurisdictions, most frameworks distinguish between multiple risk tiers, ranging from unacceptable to minimal. Higher-risk categories trigger more stringent requirements, while lower-risk applications face fewer obligations. Organizations should conduct preliminary risk assessments of their AI portfolio to identify which systems may fall into regulated categories and prioritize compliance efforts accordingly.
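A preliminary portfolio triage can be sketched as a function that assigns each system its strictest applicable tier. The tag sets here are hypothetical and heavily simplified. A real assessment maps each use case to the legal text of the relevant framework.

```python
from enum import IntEnum

class RiskTier(IntEnum):
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4

# Hypothetical, heavily simplified tag sets standing in for a legal analysis.
PROHIBITED_USES = {"social_scoring", "subliminal_manipulation", "exploiting_vulnerabilities"}
HIGH_RISK_DOMAINS = {"employment", "credit", "education", "healthcare",
                     "law_enforcement", "critical_infrastructure"}
TRANSPARENCY_TRIGGERS = {"chatbot", "synthetic_media"}

def triage(tags) -> RiskTier:
    """Return the strictest tier any tag triggers."""
    tags = set(tags)
    if tags & PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if tags & HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if tags & TRANSPARENCY_TRIGGERS:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL

def prioritize(portfolio):
    """Order systems so the strictest tiers get compliance attention first."""
    return sorted(portfolio, key=lambda name: triage(portfolio[name]), reverse=True)

if __name__ == "__main__":
    portfolio = {
        "spam-filter": {"email"},
        "support-bot": {"chatbot"},
        "cv-ranker": {"employment"},
    }
    for name in prioritize(portfolio):
        print(name, triage(portfolio[name]).name)
    # cv-ranker HIGH / support-bot LIMITED / spam-filter MINIMAL
```

The function returns only the strictest tier, but obligations can stack in practice: a chatbot used for hiring decisions would be high-risk and still owe users an AI disclosure.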

Unacceptable Risk: Banned AI Applications

At the highest risk level, some AI applications face outright prohibition under emerging regulatory frameworks. The EU AI Act establishes a category of “unacceptable risk” applications that are banned from the European market entirely because they are deemed incompatible with EU values and fundamental rights. Similar prohibitions are emerging in other jurisdictions, though specific banned applications vary.

Prohibited applications typically include those exploiting vulnerabilities of specific groups, enabling social scoring by governments, using subliminal manipulation techniques, or deploying real-time remote biometric identification in public spaces (with limited exceptions). Organizations should thoroughly screen proposed AI applications against these prohibited categories during initial conception phases to avoid investing in systems that may face regulatory barriers.

The boundaries of prohibited categories remain subject to interpretation and will likely evolve through regulatory guidance and case law. Organizations operating in sensitive domains should monitor regulatory developments closely and adopt conservative interpretations when uncertainties exist. When contemplating novel applications that may approach prohibited categories, early engagement with regulatory authorities can provide valuable clarity.

High-Risk AI: Strict Regulatory Requirements

High-risk AI systems face the most stringent regulatory requirements while remaining permissible with appropriate safeguards. This category typically includes applications in domains like healthcare, transportation, law enforcement, critical infrastructure, and employment. Systems qualifying as high-risk must comply with comprehensive requirements spanning design, documentation, data governance, and ongoing monitoring.

The EU AI Act exemplifies this approach by imposing extensive obligations on high-risk AI providers, including implementing risk management systems, ensuring data quality, maintaining comprehensive technical documentation, enabling human oversight, and achieving appropriate accuracy and robustness. Similar requirements appear in other jurisdictions, though with varying scope and specificity.

Organizations developing high-risk AI applications should establish compliance processes early in the development lifecycle. This includes conducting thorough risk assessments, implementing quality management systems, preparing required documentation, and designing appropriate testing protocols. Early compliance planning reduces the need for costly redesigns later and positions systems advantageously for regulatory review.

Limited Risk: Transparency Obligations

Limited-risk AI applications face lighter regulatory requirements focused primarily on transparency. This category typically includes systems interacting directly with individuals, such as chatbots, and tools generating synthetic content such as deepfakes. Emotion recognition systems also carry disclosure duties, although the EU AI Act bans them in workplaces and schools and otherwise generally treats them as high-risk. While not subject to the comprehensive requirements imposed on high-risk applications, limited-risk systems must still meet disclosure obligations ensuring users understand they are interacting with AI.

Transparency requirements for limited-risk AI generally include disclosing that content is AI-generated, informing users when interacting with AI systems rather than humans, and providing clear information about a system’s capabilities and limitations. These requirements help individuals make informed choices about engaging with AI and set appropriate expectations about system performance.

Organizations deploying limited-risk AI should develop standardized disclosure practices that satisfy regulatory requirements while maintaining positive user experiences. This might include designing natural notification flows within user interfaces, providing layered information allowing users to access additional details as desired, and using clear, non-technical language accessible to the intended audience.
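A first-contact notice with an optional second layer of detail can be sketched in a few lines. The wording, class, and metadata field names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical notice text: a short first layer and an optional second layer.
AI_NOTICE = "You're chatting with an AI assistant, not a person."
AI_NOTICE_DETAILS = ("Answers are generated automatically and may contain mistakes. "
                     "Type 'agent' to reach a human.")

@dataclass
class ChatSession:
    notice_shown: bool = False

def deliver(session: ChatSession, model_reply: str) -> str:
    """Prepend the short AI notice to the first reply of each session only."""
    if session.notice_shown:
        return model_reply
    session.notice_shown = True
    return f"{AI_NOTICE} Type 'more' for details.\n\n{model_reply}"

def notice_details() -> str:
    """Second layer of information, shown only when the user asks for it."""
    return AI_NOTICE_DETAILS

def label_generated(metadata: dict) -> dict:
    """Return a copy of a content item's metadata marked as AI-generated."""
    return {**metadata, "ai_generated": True}

if __name__ == "__main__":
    session = ChatSession()
    print(deliver(session, "Hi! How can I help?"))  # notice + reply
    print(deliver(session, "Your order shipped."))  # reply only
    print(label_generated({"title": "Product photo"}))
```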

Minimal Risk: Light-Touch Regulation

Minimal-risk AI applications face the lightest regulatory touch, with most frameworks imposing few if any specific obligations beyond those applicable to software generally. This category typically includes applications like spam filters, inventory management systems, and basic process automation tools where potential harms are limited. Organizations deploying such systems retain flexibility in their development approaches but may voluntarily adopt appropriate safeguards as a matter of good practice.

While minimal-risk applications may not trigger specific AI regulatory requirements, they remain subject to general legal frameworks governing data protection, consumer protection, and product liability. Organizations should maintain awareness of these underlying obligations while benefiting from the regulatory flexibility afforded to lower-risk applications.

Even for minimal-risk applications, voluntary adoption of AI ethics principles and governance practices can provide business benefits. These include enhanced user trust, reduced reputational risks, and readiness for potential regulatory changes. Organizations may consider applying scaled-down versions of high-risk governance practices to lower-risk applications as appropriate to their specific context.

Industry Self-Regulation and Standards

“AI Standards by ISO/IEC, AAMI & IEEE …” from www.linkedin.com and used with no modifications.

Beyond formal regulations, industry standards play a crucial role in establishing technical specifications and best practices for responsible AI. These standards provide practical guidance for implementing regulatory requirements and often influence regulatory development. Organizations should actively engage with relevant standards bodies to both shape emerging standards and prepare for their adoption.

Industry standards offer several advantages complementing formal regulation. They can evolve more rapidly than legislation, incorporate detailed technical specifications beyond regulatory capacity, and promote global interoperability. Many regulatory frameworks explicitly reference standards as mechanisms for demonstrating compliance with broader principles, making standards knowledge essential for regulatory navigation.

IEEE Global Initiative on Ethics of AI

The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems has developed influential standards addressing ethical considerations in AI design and deployment. Most notably, IEEE 7000 series standards provide detailed frameworks for ethically aligned design, transparency, algorithmic bias, data privacy, and other critical aspects of responsible AI. These standards offer concrete implementation guidance for organizations seeking to operationalize ethical principles.

IEEE P7001 on transparency provides metrics and methods for assessing AI system transparency across different stakeholder needs. IEEE P7003 on algorithmic bias offers methodologies for identifying and mitigating discriminatory effects. Other standards in the series address data privacy (P7002) and fail-safe design (P7009), while the foundational IEEE 7000 standard sets out a model process for addressing ethical concerns during system design. Organizations can use these standards as practical tools for implementing ethical principles and preparing for regulatory compliance.

The IEEE standards development process involves diverse stakeholders including industry, academia, civil society, and government representatives. This multi-stakeholder approach produces standards that balance different perspectives and interests, increasing their utility and acceptance. Organizations should consider participating in these development processes to ensure standards reflect practical implementation realities.

ISO/IEC Standards for AI

The International Organization for Standardization (ISO) and International Electrotechnical Commission (IEC) have established Subcommittee 42 of their Joint Technical Committee 1 (ISO/IEC JTC 1/SC 42), focused specifically on artificial intelligence standardization. This committee is developing standards addressing AI terminology, governance, trustworthiness, and technical specifications that are gaining global recognition and regulatory reference.

ISO/IEC 42001 provides an AI management system standard modeled after successful frameworks like ISO 9001 for quality management. This standard establishes requirements for organizational governance of AI, including policy development, risk management, and continuous improvement processes. Organizations implementing ISO/IEC 42001 create management systems that can demonstrate responsible AI practices to regulators, customers, and other stakeholders.

Additional standards in the series address specific aspects of AI development and deployment. ISO/IEC 23894 provides guidance on risk management for AI systems. ISO/IEC 5338 addresses AI lifecycle considerations. ISO/IEC TR 24028 offers a trustworthiness overview for AI systems. These standards provide detailed technical guidance beyond regulatory requirements and can help organizations implement comprehensive AI governance frameworks.

Corporate AI Ethics Boards: Do They Work?

Many organizations have established AI ethics boards or committees as self-regulatory mechanisms to provide oversight of AI development and deployment. These bodies typically include diverse expertise spanning technical, ethical, legal, and domain-specific knowledge. While potentially valuable governance tools, their effectiveness varies significantly based on structure, authority, and implementation.

Research indicates that successful AI ethics boards share several characteristics. They require clear charters defining scope, authority, and decision-making processes. They need diverse membership extending beyond technical experts to include perspectives from affected stakeholders and ethics specialists. Most importantly, they must possess meaningful authority to influence development decisions, including the power to delay or prevent deployments presenting significant ethical concerns.

Organizations considering establishing AI ethics boards should examine lessons from both successful implementations and notable failures. Failed ethics boards have typically suffered from limited authority, inadequate resources, unclear processes, or conflicts of interest undermining their independence. Effective boards, conversely, integrate with existing governance structures, receive strong executive support, and maintain appropriate independence from business pressure.

Practical Compliance Steps for AI Developers

Beyond understanding regulatory principles, organizations need practical guidance for implementing compliance measures throughout the AI lifecycle. While specific requirements vary by jurisdiction and risk level, common compliance elements have emerged across frameworks. Organizations should adapt these elements to their specific context while maintaining awareness of jurisdiction-specific obligations.

Effective compliance programs integrate regulatory requirements into existing development and governance processes rather than treating them as separate activities. This integration helps organizations maintain compliance without unnecessarily disrupting innovation. It also ensures that compliance considerations inform design decisions from the earliest stages, reducing the need for costly modifications later.

Required Documentation for AI Systems

Documentation requirements feature prominently in AI regulatory frameworks, particularly for high-risk applications. These requirements serve multiple purposes: enabling regulatory oversight, facilitating internal governance, supporting incident investigation, and providing transparency to affected stakeholders. Organizations should establish systematic documentation practices covering the entire AI lifecycle.

Key documentation typically includes system purpose and risk assessment, data provenance and processing records, model development methodology, validation testing results, deployment procedures, and monitoring plans. For high-risk systems, documentation must typically demonstrate how the system addresses specific regulatory requirements such as accuracy, robustness, and human oversight. This documentation should be maintained throughout a system’s lifecycle and updated when significant changes occur.

Organizations should develop standardized documentation templates and processes aligned with applicable regulatory requirements. These templates should strike a balance between comprehensiveness and usability, capturing essential information without creating excessive administrative burden. Documentation systems should integrate with existing development tools and processes where possible to minimize duplication and ensure accuracy.
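A documentation template can be kept as structured data so that gaps are easy to detect automatically. The sketch below is a minimal illustration, assuming a hypothetical `AISystemDocumentation` record whose sections mirror the documentation items described above; the section names and the `update` and `missing_sections` helpers are illustrative choices, not taken from any regulation or standard.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical section names mirroring the documentation items described in the text.
REQUIRED_SECTIONS = (
    "purpose",
    "risk_assessment",
    "data_provenance",
    "development_methodology",
    "validation_results",
    "deployment_procedures",
    "monitoring_plan",
)


@dataclass
class AISystemDocumentation:
    """Hypothetical documentation record for one AI system."""

    system_name: str
    sections: dict[str, str] = field(default_factory=dict)
    last_updated: date = field(default_factory=date.today)

    def update(self, section: str, text: str) -> None:
        """Record new content for a known section and stamp the change date."""
        if section not in REQUIRED_SECTIONS:
            raise ValueError(f"unknown section: {section!r}")
        self.sections[section] = text
        self.last_updated = date.today()

    def missing_sections(self) -> list[str]:
        """Return required sections that are absent or blank, in template order."""
        return [s for s in REQUIRED_SECTIONS if not self.sections.get(s, "").strip()]


doc = AISystemDocumentation("credit-screening-model")
doc.update("purpose", "Rank consumer credit applications for manual review")
doc.update("risk_assessment", "Treated as high-risk; see impact assessment")
print(doc.missing_sections())
# ['data_provenance', 'development_methodology', 'validation_results',
#  'deployment_procedures', 'monitoring_plan']
```

Keeping the template as data lets the same completeness check run inside an existing build or review pipeline, which supports integrating documentation with development tools rather than maintaining it separately.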

How to Conduct AI Impact Assessments

AI impact assessments have emerged as a key regulatory tool for identifying and mitigating potential harms before deployment. These structured evaluations examine a system’s potential effects on individuals, groups, and broader society, with particular attention to fundamental rights, safety, and ethical considerations. While specific assessment requirements vary between frameworks, common elements have emerged across jurisdictions.

Effective impact assessments follow a systematic process: defining the system’s purpose and context, identifying stakeholders and potential impacts, evaluating risks across multiple dimensions, developing mitigation measures, and establishing ongoing monitoring mechanisms. This process should involve diverse perspectives including technical experts, legal advisors, ethics specialists, and representatives of potentially affected groups. Documentation of both the assessment process and resulting mitigation measures typically forms part of regulatory compliance.

Organizations should integrate impact assessments into their development lifecycle, conducting initial assessments during early design phases and updating them as systems evolve. This iterative approach ensures that impact considerations inform design decisions throughout development rather than serving merely as retrospective compliance exercises. For high-risk applications, impact assessments should undergo independent review to identify potential blind spots or biases in the assessment process itself.
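The risk-evaluation and mitigation steps of an impact assessment are often recorded in a risk register. The following is a minimal sketch, assuming a simple likelihood-times-severity score on 1 to 5 scales and a review threshold of 12; the scales, the threshold, the `ImpactRisk` class, and the example entries are all hypothetical choices, not requirements of any framework.

```python
from dataclasses import dataclass


@dataclass
class ImpactRisk:
    """One row of a hypothetical impact-assessment risk register."""

    stakeholder: str
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    severity: int  # assumed scale: 1 (negligible) to 5 (critical)
    mitigation: str = ""

    def __post_init__(self) -> None:
        # Reject values outside the assumed 1-5 scales.
        for name in ("likelihood", "severity"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


def unmitigated_high_risks(register: list[ImpactRisk], threshold: int = 12) -> list[ImpactRisk]:
    """Return risks scoring at or above the threshold with no mitigation recorded, highest first."""
    flagged = [r for r in register if r.score >= threshold and not r.mitigation.strip()]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


register = [
    ImpactRisk("loan applicants", "Lower approval rates for a protected group", 3, 5),
    ImpactRisk("loan applicants", "Unexplained rejections", 4, 3,
               mitigation="Provide reason codes and a human review channel"),
    ImpactRisk("operations staff", "Over-reliance on model scores", 3, 3),
]

for risk in unmitigated_high_risks(register):
    print(risk.score, risk.description)  # prints: 15 Lower approval rates for a protected group
```

Re-running the check whenever the register changes fits the iterative approach described above: a new design decision either adds a mitigation or surfaces a new flagged risk.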

Testing Requirements for High-Risk AI

Testing requirements for high-risk AI systems extend beyond traditional software quality assurance to address AI-specific concerns including accuracy, robustness, bias, and safety. Regulatory frameworks typically mandate comprehensive testing before deployment and periodic retesting throughout a system’s operational life. Organizations should develop testing protocols addressing both technical performance and real-world impact dimensions.

Comprehensive testing protocols typically include validation against representative test data, stress testing with adversarial inputs, bias assessment across demographic groups, and performance evaluation under varied conditions. For particularly sensitive applications, regulatory frameworks may require independent testing by accredited third parties. Test results must typically be thoroughly documented, including methodology, datasets, performance metrics, identified limitations, and mitigation measures for any discovered issues.

Organizations should adopt testing approaches appropriate to their specific context while ensuring alignment with regulatory expectations. This includes selecting appropriate performance metrics, defining acceptable performance thresholds, and establishing clear procedures for addressing identified issues. Testing protocols should evolve as systems are updated and as new testing methodologies emerge within the field.
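Bias assessment across demographic groups and acceptable-performance thresholds can be combined into a simple pre-deployment gate. This is a minimal sketch in plain Python, assuming binary predictions, a hypothetical minimum per-group accuracy of 0.8, and a minimum ratio of 0.8 between the lowest and highest group selection rates. That ratio echoes the "four-fifths" rule of thumb from US employment-selection guidance; it is an illustrative choice, not a threshold set by any AI regulation, and a real protocol would pick metrics suited to its context.

```python
from collections import defaultdict


def group_metrics(y_true, y_pred, groups):
    """Per-group accuracy and selection rate (share of positive predictions)."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must have the same length")
    tallies = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        t = tallies[group]
        t["n"] += 1
        t["correct"] += int(truth == pred)
        t["positive"] += int(pred == 1)
    return {
        g: {"accuracy": t["correct"] / t["n"], "selection_rate": t["positive"] / t["n"]}
        for g, t in tallies.items()
    }


def threshold_issues(metrics, min_accuracy=0.8, min_selection_ratio=0.8):
    """List threshold breaches; an empty list means the gate passes."""
    issues = []
    for group, m in metrics.items():
        if m["accuracy"] < min_accuracy:
            issues.append(f"group {group}: accuracy {m['accuracy']:.2f} below {min_accuracy}")
    rates = [m["selection_rate"] for m in metrics.values()]
    if rates and max(rates) > 0:
        ratio = min(rates) / max(rates)
        if ratio < min_selection_ratio:
            issues.append(f"selection-rate ratio {ratio:.2f} below {min_selection_ratio}")
    return issues


y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

metrics = group_metrics(y_true, y_pred, groups)
for issue in threshold_issues(metrics):
    print(issue)
# group A: accuracy 0.75 below 0.8
# selection-rate ratio 0.50 below 0.8
```

Storing the returned issues together with the datasets and metric definitions used gives the documented test results, identified limitations, and follow-up actions the text calls for.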

Ongoing Monitoring Obligations

AI regulatory frameworks increasingly recognize that compliance cannot end at deployment but must continue throughout a system’s operational life. Many regulations impose ongoing monitoring obligations requiring organizations to track system performance, identify emerging issues, and implement necessary adjustments. These requirements acknowledge AI systems’ capacity to evolve in unexpected ways when exposed to real-world data and usage patterns.

Effective monitoring programs combine technical and organizational elements. Technical monitoring includes tracking performance metrics, detecting drift from expected behavior, and identifying potential biases or safety issues. Organizational monitoring includes maintaining incident reporting mechanisms, conducting periodic reviews, and ensuring appropriate escalation paths for identified concerns. Both elements require clear documentation and designated responsibility within the organization.

Organizations should design monitoring systems proportionate to their AI applications’ risk level and complexity. High-risk systems require robust, continuous monitoring with clear thresholds triggering review or intervention. Lower-risk systems may allow lighter monitoring approaches while still maintaining awareness of potential issues. All monitoring should feed into continuous improvement processes, with lessons learned incorporated into future development and deployment.
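Drift detection with thresholds that trigger review can be illustrated with the population stability index (PSI), one common way of comparing a production distribution with a baseline: PSI = Σ (aᵢ − eᵢ) ln(aᵢ / eᵢ) over shared bins, where eᵢ and aᵢ are the baseline and production proportions. The sketch assumes pre-binned counts and hypothetical review thresholds per risk level. The 0.1 and 0.25 values echo a widely used PSI rule of thumb rather than any regulatory figure, and the `REVIEW_THRESHOLDS` mapping is illustrative only.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI between baseline and production counts over the same bins."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("baseline and production must use the same bins")
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    if e_total == 0 or a_total == 0:
        raise ValueError("counts must not be all zero")
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Floor proportions at eps so empty bins do not cause log(0).
        e_pct = max(e / e_total, eps)
        a_pct = max(a / a_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi


# Hypothetical thresholds: stricter (lower) for higher-risk systems.
REVIEW_THRESHOLDS = {"high": 0.10, "limited": 0.20, "minimal": 0.25}


def needs_review(psi, risk_level):
    """True when drift reaches the review threshold for this risk level."""
    return psi >= REVIEW_THRESHOLDS[risk_level]


baseline = [250, 250, 250, 250]
production = [100, 200, 300, 400]
psi = population_stability_index(baseline, production)
print(f"PSI = {psi:.3f}")  # PSI = 0.228
for level in ("high", "limited", "minimal"):
    print(level, needs_review(psi, level))
```

The same drift score triggers review for the high-risk and limited-risk settings but not the minimal-risk one, which mirrors the proportionality point above: the metric is shared, while the intervention threshold depends on risk level.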

The Future of AI Governance

The AI regulatory landscape continues to evolve rapidly, with frameworks maturing and new approaches emerging. Organizations must maintain awareness of developing trends to anticipate future requirements and position themselves advantageously within the evolving governance ecosystem. Several clear directions are emerging that will likely shape AI regulation in coming years.

Regulatory approaches will likely become increasingly sophisticated, moving beyond broad principles to detailed requirements tailored to specific AI applications and contexts. This evolution will require organizations to develop more nuanced compliance strategies addressing the particular characteristics of their AI systems rather than applying one-size-fits-all approaches. Staying ahead of this evolution requires ongoing regulatory monitoring and active engagement with developing governance frameworks.

Emerging Regulatory Trends to Watch

Several regulatory trends are gaining momentum and likely to influence future governance frameworks. Algorithmic impact assessments are becoming more standardized and may become mandatory for a wider range of applications. Certification schemes providing independent verification of AI systems’ compliance with regulatory requirements are emerging, potentially creating market advantages for certified systems. Regulatory sandboxes offering controlled testing environments for innovative AI applications are gaining popularity as mechanisms balancing innovation and oversight.

Sectoral regulation is likely to increase, with domain-specific requirements supplementing horizontal frameworks. Financial services, healthcare, transportation, and public sector applications are particularly likely to see tailored regulatory approaches addressing their specific risk profiles and operational contexts. Organizations operating in these sectors should monitor both general AI regulations and domain-specific developments.

Regulatory attention to foundation models and generative AI is rapidly increasing, with frameworks evolving to address their unique characteristics and risks. Future regulations will likely impose specific requirements on these systems’ developers while creating shared responsibility frameworks recognizing the distributed nature of their development and deployment. Organizations throughout the AI supply chain should prepare for these emerging regulatory approaches.

International Coordination Efforts

As AI regulation develops globally, international coordination efforts are expanding to promote regulatory interoperability and prevent fragmentation. Organizations including the OECD, G7, Global Partnership on AI, and UNESCO are developing frameworks and principles intended to inform national regulations and promote consistent approaches. These efforts aim to reduce compliance complexity for global organizations while ensuring comprehensive protection against AI risks.

The OECD AI Principles have been particularly influential, providing a common reference point for numerous national frameworks. The Council of Europe’s Framework Convention on Artificial Intelligence, adopted in 2024, represents another significant coordination effort and is the first legally binding international treaty on AI. Meanwhile, bilateral discussions between major jurisdictions like the EU and US seek to address regulatory alignment while respecting different legal traditions and priorities.

Despite these coordination efforts, significant regulatory divergence remains likely due to differing cultural values, legal systems, and strategic priorities. Organizations operating globally should prepare for this continued divergence by developing modular compliance approaches adaptable to varying requirements while advocating for greater harmonization through industry associations and policy engagement.
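A modular compliance approach can be pictured as a shared baseline of controls plus jurisdiction-specific overlays. In this minimal sketch every control name and the placeholder jurisdictions `jurisdiction_a` and `jurisdiction_b` are hypothetical; the actual content of each overlay has to come from legal analysis of the applicable rules.

```python
# Hypothetical baseline controls applied everywhere the organization operates.
BASELINE_CONTROLS = frozenset(
    {"system_inventory", "risk_assessment", "technical_documentation", "incident_response"}
)

# Hypothetical overlays; placeholder names, not real legal requirements.
JURISDICTION_OVERLAYS = {
    "jurisdiction_a": frozenset({"conformity_assessment", "human_oversight_plan"}),
    "jurisdiction_b": frozenset({"sector_regulator_notification", "risk_assessment"}),
}


def required_controls(jurisdictions):
    """Union of the baseline and each market's overlay, sorted for stable output."""
    controls = set(BASELINE_CONTROLS)
    for name in jurisdictions:
        if name not in JURISDICTION_OVERLAYS:
            raise ValueError(f"no overlay defined for {name!r}")
        controls |= JURISDICTION_OVERLAYS[name]
    return sorted(controls)


print(required_controls(["jurisdiction_a", "jurisdiction_b"]))
```

Overlapping obligations collapse into a single control (`risk_assessment` appears in the baseline and in one overlay but is implemented once), and entering a new market means adding an overlay rather than building a separate compliance program.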

The Role of Technical Standards in Regulation

Technical standards will play an increasingly central role in AI governance, serving as bridges between high-level regulatory principles and practical implementation requirements. Many regulatory frameworks explicitly reference standards as mechanisms for demonstrating compliance, creating a two-tier governance system where regulations establish broad requirements while standards provide detailed implementation guidance. Under the EU AI Act, for example, high-risk systems that conform to harmonised standards published in the Official Journal of the EU are presumed to meet the corresponding requirements.

This approach offers several advantages. Standards can evolve more rapidly than legislation, allowing governance to keep pace with technological development. They can incorporate technical specificity beyond regulatory capacity, providing concrete guidance for implementation. And they can promote global interoperability even when regulations differ between jurisdictions, reducing compliance complexity for international organizations.

Organizations should actively engage with relevant standards development processes to both shape emerging standards and prepare for their adoption. This engagement provides visibility into developing governance approaches while ensuring standards reflect practical implementation realities. It also positions organizations advantageously as standards gain regulatory recognition and market importance.

Frequently Asked Questions

As organizations navigate the complex landscape of AI regulation and ethics, certain questions arise consistently. These frequently asked questions reflect common concerns about compliance requirements, implementation approaches, and regulatory developments. Understanding these issues helps organizations develop effective governance strategies while avoiding common pitfalls.

The answers provided here reflect current understanding but should be adapted to specific organizational contexts and jurisdictions. As regulatory frameworks continue to evolve, organizations should maintain ongoing legal and technical advice to ensure their compliance approaches remain current and appropriate.

What happens if my company doesn’t comply with AI regulations?

Non-compliance with AI regulations can result in significant consequences that vary by jurisdiction and violation severity. Financial penalties represent the most immediate risk: the EU AI Act allows fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher, for prohibited AI practices, exceeding even the GDPR’s maximum of 4%. Regulatory authorities may also issue enforcement orders requiring system modifications or withdrawal from the market, creating substantial operational disruption and remediation costs.
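Under the EU AI Act, the ceiling for the most serious tier of fines, those for prohibited AI practices, is the higher of a fixed amount (€35 million) and 7% of total worldwide annual turnover; for SMEs, including start-ups, the Act applies whichever of the two is lower. The sketch below computes only that statutory ceiling, assuming whole-euro turnover figures. The function name is hypothetical, other categories of infringement carry lower caps, and the fine actually imposed is set case by case.

```python
FIXED_CAP_EUR = 35_000_000  # fixed amount for prohibited-practice infringements
TURNOVER_PERCENT = 7  # percentage of total worldwide annual turnover


def prohibited_practice_fine_ceiling(worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Statutory maximum in whole euros (integer arithmetic avoids float rounding)."""
    turnover_cap = worldwide_turnover_eur * TURNOVER_PERCENT // 100
    if is_sme:
        # For SMEs the lower of the two amounts applies.
        return min(FIXED_CAP_EUR, turnover_cap)
    return max(FIXED_CAP_EUR, turnover_cap)


print(prohibited_practice_fine_ceiling(2_000_000_000))  # 140000000
print(prohibited_practice_fine_ceiling(100_000_000))  # 35000000
print(prohibited_practice_fine_ceiling(100_000_000, is_sme=True))  # 7000000
```

For a company with €2 billion in turnover the percentage-based cap (€140 million) exceeds the fixed amount, which is why tying penalties to turnover makes them scale with company size.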

Beyond direct regulatory consequences, non-compliance creates significant business risks. These include reputational damage affecting customer trust, investor concerns regarding governance effectiveness, potential litigation from affected individuals, and competitive disadvantage as compliant competitors gain certification advantages. The combination of regulatory and business impacts makes compliance a strategic imperative rather than merely a legal obligation.

Do AI regulations apply to all types of machine learning systems?

AI regulations typically apply to a broad range of systems using machine learning techniques, though specific scope definitions vary between frameworks. Most regulations adopt functional definitions focused on a system’s capabilities and autonomy rather than specific technical approaches. This means that various machine learning approaches—supervised, unsupervised, reinforcement learning, deep learning, and others—generally fall within regulatory scope when used for covered applications.

However, regulatory requirements often vary based on a system’s risk level and application context rather than its technical approach. A relatively simple machine learning model used in a high-risk context like healthcare diagnostics may face stringent requirements, while a sophisticated deep learning system used for low-risk applications might face minimal obligations. Organizations should assess regulatory applicability based primarily on use case and potential impact rather than technical architecture.

Some regulations include specific provisions addressing unique characteristics of certain AI approaches. For instance, generative AI systems often face specific transparency requirements regarding synthetic content, while reinforcement learning systems may encounter particular safety testing expectations. Organizations should examine whether their specific technical approaches trigger any specialized regulatory provisions beyond those applying to AI systems generally.
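The principle that obligations follow the use case rather than the model architecture can be sketched as a lookup keyed only on use case. The mapping below is hypothetical and illustrative; it is not the legal classification under the EU AI Act or any other framework, and treating unknown use cases as high risk until assessed is an assumed conservative default.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"


# Hypothetical, illustrative mapping; a real one comes from legal analysis.
USE_CASE_TIERS = {
    "medical_diagnosis_support": RiskTier.HIGH,
    "creditworthiness_assessment": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.LIMITED,
    "spam_filtering": RiskTier.MINIMAL,
}


@dataclass
class AISystem:
    name: str
    architecture: str  # documented, but deliberately unused when assigning a tier
    use_case: str


def risk_tier(system: AISystem) -> RiskTier:
    """Assign a tier from the use case alone; unknown use cases default to HIGH pending review."""
    return USE_CASE_TIERS.get(system.use_case, RiskTier.HIGH)


simple = AISystem("triage-scorer", "logistic regression", "medical_diagnosis_support")
complex_ = AISystem("mail-guard", "deep transformer", "spam_filtering")
print(risk_tier(simple).value, risk_tier(complex_).value)  # high minimal
```

The simple model lands in the high tier and the sophisticated one in the minimal tier, matching the point above that a system's context, not its technical sophistication, drives its obligations.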

How do small businesses meet AI compliance requirements?

Small businesses face particular challenges in meeting AI regulatory requirements, including limited compliance resources, restricted technical expertise, and competing priorities. However, several approaches can help smaller organizations navigate compliance efficiently. Regulatory frameworks often include proportionality principles recognizing organizational size and capacity, allowing scaled approaches for smaller entities while maintaining essential protections.

Practical strategies for small businesses include focusing compliance efforts on higher-risk applications, utilizing open-source compliance tools and frameworks, leveraging industry associations for shared guidance, and considering compliance-as-a-service offerings from specialized providers. For particularly complex requirements, small businesses might engage external expertise for specific activities like impact assessments or documentation development while maintaining core governance internally.

Small businesses should also consider compliance requirements when selecting AI vendors and partners. By choosing providers that offer robust compliance documentation, transparent model information, and appropriate contractual assurances, small businesses can significantly reduce their compliance burden. This approach is particularly valuable when using foundation models or other complex AI systems beyond internal development capacity.

Can AI regulations really keep up with rapid technological advances?

The challenge of regulatory pace versus technological development represents a central tension in AI governance. Traditional regulatory approaches struggle to match AI’s rapid evolution, potentially creating frameworks that are outdated before implementation or so vague that they provide limited practical guidance. However, several emerging regulatory approaches aim to address this challenge while providing meaningful oversight.

Risk-based frameworks focus on potential impacts rather than specific technologies, allowing regulations to remain relevant as technical approaches evolve. Principle-based requirements establish outcomes to be achieved without prescribing specific implementation methods, providing flexibility for technological innovation. Regulatory sandboxes offer controlled environments for testing novel applications under regulatory supervision, facilitating both innovation and appropriate oversight.

The integration of technical standards within regulatory frameworks further addresses the pace challenge. By referencing standards that can evolve more rapidly than legislation, regulations can maintain technological currency while providing legal certainty. This approach creates a dynamic governance system where high-level principles remain stable while implementation guidance evolves alongside technological development.

Where can I find resources to help with AI compliance?

Numerous resources are available to support organizations navigating AI compliance requirements. Regulatory authorities increasingly publish detailed guidance documents explaining requirements and implementation approaches. For instance, the European Commission provides guidance on the AI Act, while NIST offers detailed materials on its AI Risk Management Framework. These official resources provide authoritative interpretation of regulatory expectations.

Industry associations, standards bodies, and professional organizations offer complementary resources addressing practical implementation challenges. The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems provides detailed implementation guidance for ethical AI principles. Industry-specific associations develop sector-relevant interpretations of broader requirements. Professional organizations for privacy, security, and AI practitioners offer specialized guidance in their respective domains.

Join the Conversation